
In today's fast-paced digital world, database downtime can spell disaster for businesses that rely on these systems to store and process important data. A brief interruption or a complete system failure can both have severe consequences: lost revenue, decreased productivity, and shattered customer trust. Luckily, organizations can adopt proven practices to minimize downtime and keep their databases running smoothly. As the American playwright Tennessee Williams once wrote: “Time is the longest distance between two places.”

For any organization, human resources and data are the two most valuable assets. Data is the new oil and, in many ways, the new gold too. An organization's success hinges on how effectively it handles the volume, velocity and variety of its data. For a large financial website, even one minute of downtime could, by some estimates, cost 100 million dollars or more.

In 2020, internet shutdowns in India added up to 8,927 hours and an estimated $2.8 billion in losses. Of the 21 countries that curbed internet access that year, the economic impact in India was more than double the combined cost for the next 20 countries on the list.


A Lowdown on How The Process Works

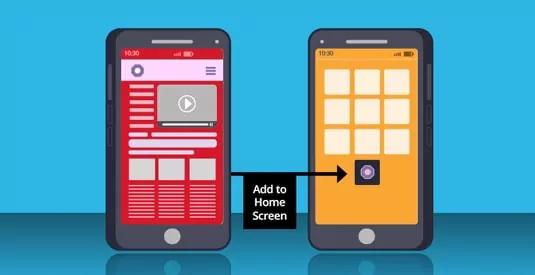

To keep data available 24x7, database technologies generally rely on two key capabilities: Disaster Recovery (DR) and High Availability (HA). Both are achieved through features such as Replication, Log Shipping and Mirroring. Many of these features are database-specific, but Replication is available in almost every database. Replication copies data from a source database to one or more target databases. If the source database becomes unavailable, users can still access the data from the targets. It can also improve performance, because read traffic can be spread across multiple databases.

In replication, the primary data source is called the publisher and the receiving end is called the subscriber. This is why the setup is popularly known as the PUB-SUB (publish-subscribe) configuration. Depending on the criticality and sensitivity of the data, HA can be achieved through several topologies: PUB-PUB, PUB-SUB, Multiple PUB and Single SUB, and Single PUB and Multiple SUB.
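
The following minimal in-memory sketch makes the PUB-SUB idea concrete: a single publisher feeds multiple subscribers. It assumes a simple ordered change log of key/value writes. Real engines replicate through a far richer log (in MySQL, the binary log) and must also handle transactions, conflicts and network failures. The `Publisher` and `Subscriber` classes are hypothetical illustrations, not any product's API.

```python
from dataclasses import dataclass, field


@dataclass
class Publisher:
    """Hypothetical source database: applies writes and records them in a change log."""
    data: dict = field(default_factory=dict)
    log: list = field(default_factory=list)  # ordered (key, value) changes

    def write(self, key, value):
        self.data[key] = value
        self.log.append((key, value))


@dataclass
class Subscriber:
    """Hypothetical target database: replays the publisher's change log in order."""
    data: dict = field(default_factory=dict)
    applied: int = 0  # how far into the publisher's log this subscriber has read

    def sync(self, publisher):
        # Apply only the changes this subscriber has not seen yet.
        for key, value in publisher.log[self.applied:]:
            self.data[key] = value
        self.applied = len(publisher.log)


pub = Publisher()
subs = [Subscriber(), Subscriber()]  # Single PUB and Multiple SUB
pub.write("order:1", "paid")
pub.write("order:2", "pending")
for s in subs:
    s.sync(pub)
pub.write("order:2", "paid")
subs[0].sync(pub)  # only the first subscriber has synced the latest change

# If the publisher is lost, reads can still be served from a subscriber.
print(subs[0].data)  # {'order:1': 'paid', 'order:2': 'paid'}
print(subs[1].data)  # {'order:1': 'paid', 'order:2': 'pending'}
```

Each subscriber tracks its own position in the log. A lagging subscriber such as `subs[1]` therefore catches up on its next sync without the publisher re-sending everything.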

The first step in setting up this configuration is taking a backup of the Publisher. Traditionally, the Publisher has to be down while the backup is taken, i.e. there can be no user activity on it. During that window the database is not accessible to the public, which may incur a financial loss.


How to Minimize Downtime

Organizations can adopt the below best practices to minimize downtime and ensure that their databases run smoothly and seamlessly.

Implementing High-Availability Architectures:

A powerful strategy for reducing downtime is implementing high-availability architectures. This approach involves setting up redundant systems and employing failover mechanisms that automatically switch to a backup server when a failure occurs. Technologies such as database clustering, load balancing, and replication spread data and workload across multiple servers. As a result, data remains accessible even if one server goes down.
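
Here is a minimal sketch of the failover idea from the client side: try servers in priority order and fall back to the next one when a server is unavailable. `Server`, `ServerDown` and `query_with_failover` are hypothetical names. In practice this job is usually handled by clustering software, a proxy or the database driver, together with health checks and the promotion of an up-to-date standby.

```python
class ServerDown(Exception):
    """Raised by a hypothetical server that is unavailable."""


class Server:
    def __init__(self, name, healthy=True):
        self.name = name
        self.healthy = healthy

    def query(self, sql):
        if not self.healthy:
            raise ServerDown(self.name)
        return f"{self.name} answered: {sql}"


def query_with_failover(servers, sql):
    """Try each server in priority order; return the first successful answer."""
    errors = []
    for server in servers:
        try:
            return server.query(sql)
        except ServerDown as exc:
            errors.append(str(exc))  # record the failure, then try the next server
    raise RuntimeError(f"all servers unavailable: {errors}")


primary = Server("primary")
standby = Server("standby")
cluster = [primary, standby]

print(query_with_failover(cluster, "SELECT 1"))  # primary answered: SELECT 1
primary.healthy = False  # simulate a primary failure
print(query_with_failover(cluster, "SELECT 1"))  # standby answered: SELECT 1
```

The caller never sees the primary's failure, which is the point of automatic failover. It does see an error when every server is down.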

Regular Database Maintenance:

Regular maintenance is critical to keep databases performing optimally and prevent unexpected downtime. Tasks like optimizing indexes, purging unnecessary data, and checking database integrity help identify and resolve potential issues before they become major problems. By proactively monitoring the database's health, including disk space, CPU usage, and memory consumption, administrators can swiftly take corrective actions.
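
Proactive health monitoring can be as simple as a scheduled check. The sketch below checks free disk space using Python's standard `shutil.disk_usage`. The 15% threshold and the path are illustrative assumptions. CPU and memory checks would need an OS-specific source or a third-party library such as `psutil`.

```python
import shutil


def check_disk(path, min_free_ratio=0.15):
    """Return (ok, free_ratio) for the filesystem that holds `path`.

    The 15% default is an illustrative threshold, not a vendor recommendation.
    """
    usage = shutil.disk_usage(path)  # named tuple of total, used, free (in bytes)
    free_ratio = usage.free / usage.total
    return free_ratio >= min_free_ratio, free_ratio


# On a database server, point this at the data directory instead of ".".
ok, ratio = check_disk(".")
status = "OK" if ok else "WARNING: low disk space"
print(f"{status}: {ratio:.1%} free")
```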

Effective Backup and Recovery Strategies:

Having robust backup and recovery strategies is paramount to mitigate the impact of unforeseen events. Regularly backing up databases and securely storing backups offsite ensures that data can be quickly restored in the event of a disaster or system failure. Periodically testing the restoration process is equally important to ensure the reliability and integrity of the backups.
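
Restore testing can be automated. This sketch uses SQLite (through Python's built-in `sqlite3` module and its online `backup` method) purely as a stand-in for a production database. It copies a database to a backup file and reopens the copy on its own connection. It then checks both `PRAGMA integrity_check` and a row count. The `orders` table and the `backup_and_verify` function are hypothetical. For MySQL, the equivalent steps would use MySQL's own backup tooling.

```python
import os
import sqlite3
import tempfile


def backup_and_verify(src: sqlite3.Connection, backup_path: str) -> bool:
    """Back up `src` to `backup_path`, then test that the copy can be restored."""
    dst = sqlite3.connect(backup_path)
    try:
        src.backup(dst)  # SQLite online backup API, exposed by sqlite3 since Python 3.7
    finally:
        dst.close()

    # Restore test: open the backup on its own connection and validate it.
    restored = sqlite3.connect(backup_path)
    try:
        integrity = restored.execute("PRAGMA integrity_check").fetchone()[0]
        backup_rows = restored.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
    finally:
        restored.close()
    source_rows = src.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
    return integrity == "ok" and backup_rows == source_rows


# Hypothetical source database with a small `orders` table.
src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
src.executemany("INSERT INTO orders (status) VALUES (?)", [("paid",), ("pending",)])
src.commit()

with tempfile.TemporaryDirectory() as tmp:
    verified = backup_and_verify(src, os.path.join(tmp, "orders-backup.db"))
print(verified)  # True
```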

Utilizing Database Monitoring Tools:

Database monitoring tools offer real-time insights into the performance and health of databases. These tools provide administrators with detailed metrics, alerts, and performance trends, empowering them to identify potential issues early on and take necessary actions. Monitoring tools also assist in pinpointing bottlenecks, optimizing queries, and effectively allocating resources, thereby reducing the likelihood of downtime caused by performance issues.
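
Monitoring tools differ widely, but the core alerting idea fits in a few lines. Keep a rolling window of a metric (here, query latency) and raise an alert when its average crosses a threshold. `LatencyMonitor`, the window size, the 200 ms threshold and the sample latencies are all illustrative assumptions.

```python
from collections import deque


class LatencyMonitor:
    """Alert when the rolling average of recent query latencies exceeds a threshold."""

    def __init__(self, window=5, threshold_ms=200.0):
        self.samples = deque(maxlen=window)  # only the most recent `window` samples are kept
        self.threshold_ms = threshold_ms

    def record(self, latency_ms):
        """Add one latency sample; return an alert message or None."""
        self.samples.append(latency_ms)
        avg = sum(self.samples) / len(self.samples)
        if avg > self.threshold_ms:
            return f"ALERT: average latency {avg:.0f} ms over last {len(self.samples)} queries"
        return None


monitor = LatencyMonitor(window=3, threshold_ms=200.0)
alerts = []
for latency in [120, 150, 180, 260, 310]:  # hypothetical per-query latencies in ms
    alert = monitor.record(latency)
    if alert:
        alerts.append(alert)
print(alerts)  # ['ALERT: average latency 250 ms over last 3 queries']
```

Averaging over a window trades a little detection delay for fewer false alarms. The first slow query (260 ms) does not trigger an alert on its own, but sustained slowness does.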

Conducting Regular Performance Tuning:

Database performance tuning aims to optimize query execution, resource allocation, and system configurations to enhance overall efficiency. By identifying and fine-tuning slow-running queries, indexing frequently accessed data, and optimizing database configurations, organizations can significantly improve system responsiveness and minimize the risk of downtime.
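
The query planner makes the effect of indexing easy to see. This sketch again uses SQLite as a stand-in, and the `orders` table and `idx_orders_customer` index are hypothetical. `EXPLAIN QUERY PLAN` reports a full table scan before the index exists and an index search afterwards. The exact wording varies between SQLite versions. MySQL offers a comparable `EXPLAIN` statement.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(10_000)],
)

query = "SELECT total FROM orders WHERE customer_id = ?"


def plan(sql):
    """Return the planner's human-readable description (last column of each row)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)).fetchall()
    return " | ".join(row[-1] for row in rows)


before = plan(query)  # a full scan, e.g. "SCAN orders"
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(query)   # an index lookup, e.g. "SEARCH orders USING INDEX idx_orders_customer (customer_id=?)"
print(before)
print(after)
```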

How CSM Tech nailed it – Bihar Aadhaar Authentication Framework (BAAF)

In our case, the Bihar Aadhaar Authentication Framework (BAAF) runs on MySQL Enterprise Edition 5.7 with a database of more than 250 GB. Taking an offline backup and configuring replication was estimated to require 9-10 hours of downtime.

We implemented a hot backup of the database using MySQL Enterprise Backup (MEB), with zero downtime and no disruption for end users. Better still, the website remained open to the public throughout the configuration and set-up of the PUB-SUB replication.
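
The following sketch shows why a hot backup avoids downtime. The backup captures a consistent copy of the data together with the log position it corresponds to. Meanwhile, the Publisher keeps accepting writes. The new Subscriber is restored from the copy and then replays only the changes made after that position. This is a simplified in-memory model with hypothetical names (`LivePublisher`, `hot_snapshot`, `seed_subscriber`), and it does not show how MEB itself works internally. Real tools typically record the equivalent position (for MySQL, a binary log position or GTID set) alongside the backup.

```python
import copy


class LivePublisher:
    """Hypothetical publisher that keeps accepting writes while it is backed up."""

    def __init__(self):
        self.data = {}
        self.log = []  # ordered change log, standing in for a replication log

    def write(self, key, value):
        self.data[key] = value
        self.log.append((key, value))

    def hot_snapshot(self):
        """Return a copy of the data plus the log position it corresponds to."""
        return copy.deepcopy(self.data), len(self.log)


def seed_subscriber(snapshot, position, publisher):
    """Restore the snapshot, then replay only the changes made after `position`."""
    data = dict(snapshot)
    for key, value in publisher.log[position:]:
        data[key] = value
    return data, len(publisher.log)


pub = LivePublisher()
pub.write("record:1", "created")
snapshot, pos = pub.hot_snapshot()  # backup taken while the site stays online
pub.write("record:2", "created")    # users keep writing during the backup/restore
pub.write("record:1", "updated")

replica, applied = seed_subscriber(snapshot, pos, pub)
print(replica == pub.data)  # True
```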

Reducing database downtime requires a proactive approach and the adoption of best practices. By implementing high-availability architectures, performing regular maintenance, establishing effective backup and recovery strategies, utilizing monitoring tools, and conducting performance tuning, organizations can greatly enhance database uptime. Emphasizing these practices not only minimizes the risk of downtime but also ensures smooth business operations, maintains customer satisfaction and keeps businesses competitive in today's data-driven landscape.
